I’m Back

New Code:

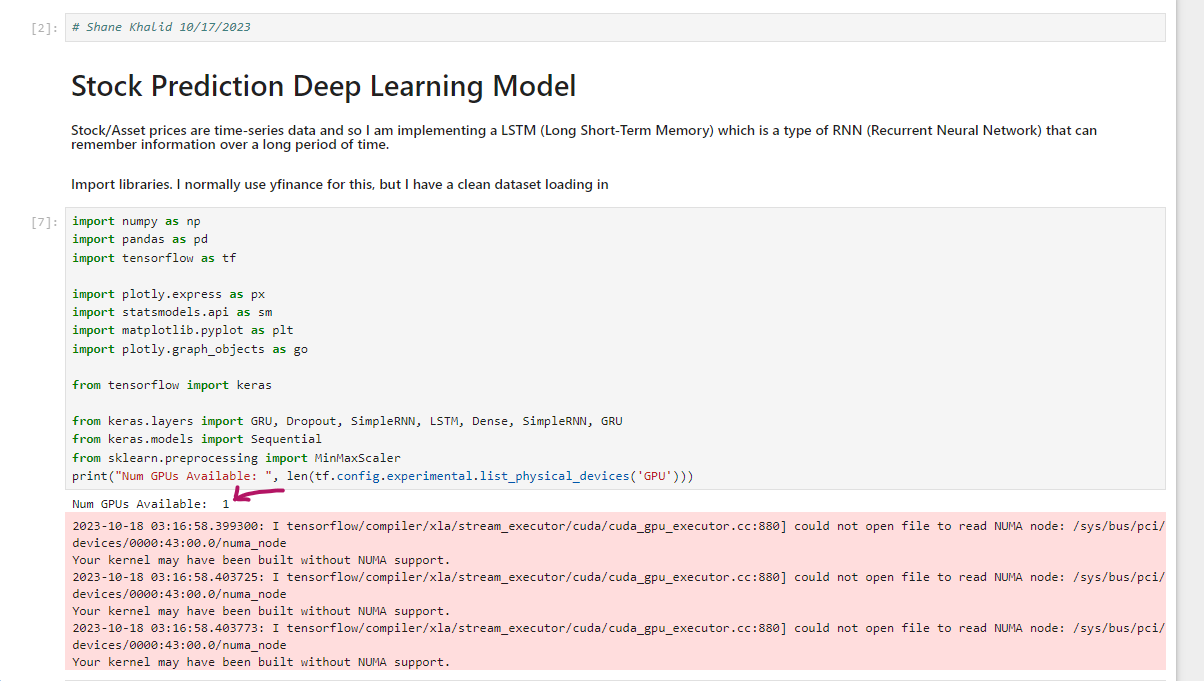

Stock Prediction Deep Learning Model

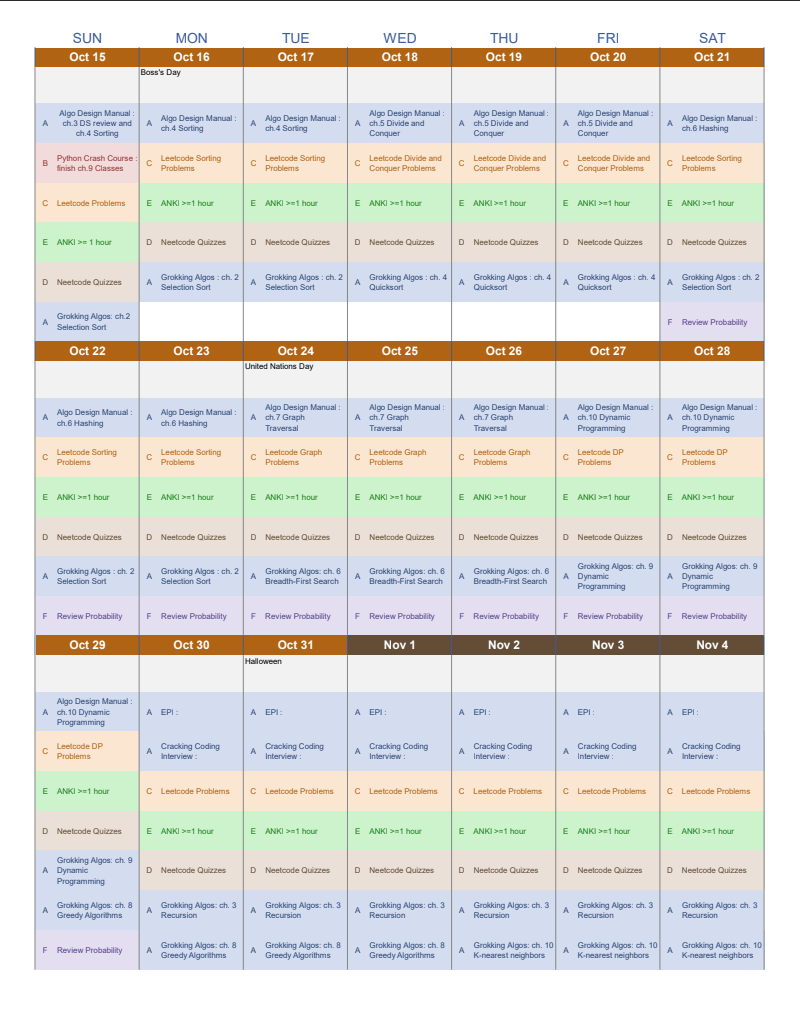

It’s been quite a while since I’ve updated this blog, mainly because I was working full-time at another gig. I am officially making the transition into Software Engineering / ML and NLP Engineering as of right now. This means starting from the basics, i.e., Data Structures and Algorithms, in addition to doing LeetCode problems. A good friend of mine (and former roommate) who worked at Goldman Sachs as a Software Development Engineer told me that he did 700+ LeetCode problems to prepare for his interviews. Goldman Sachs has an eight-interview process for Software Development Engineers. I have only completed the first “interview,” which was really just a HackerRank Online Assessment. All of my test cases passed, but I have yet to hear back from Goldman Sachs despite sending them emails almost every single day.

But that’s okay. Even if I move on to the next stage, I need to be amply prepared because they are live coding interviews. So this has been my primary ‘job’, so to speak, and I am fully invested. Here’s my regimen and my Leuchtturm A4 notebooks: one for DS and Algos, the other for LeetCode. I used a label maker to label them.

- My Study Regimen:

- My Notebooks:

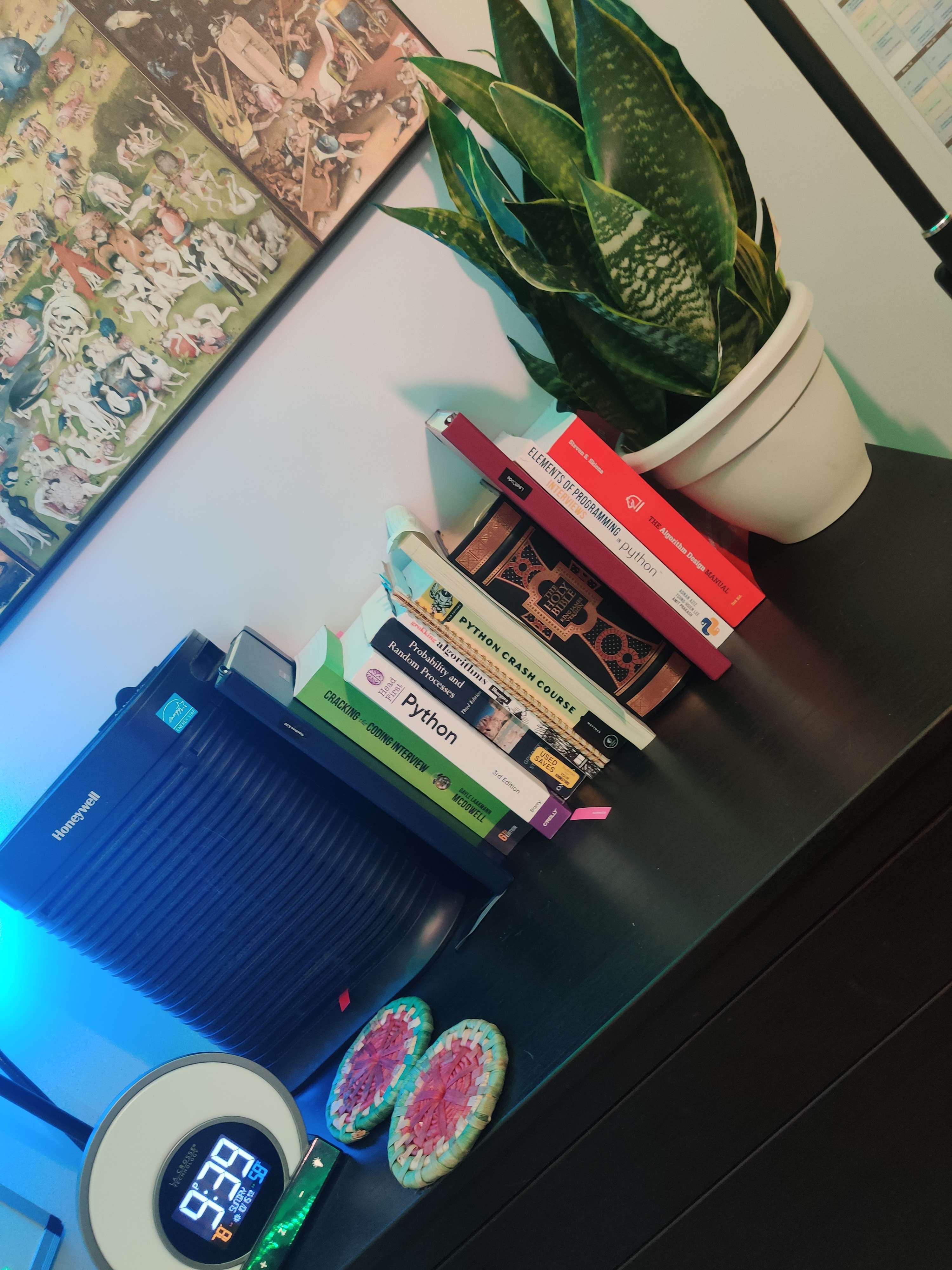

- My CS Books:

And here’s my wall. Not coding-related, but I’ve put up quite a bit of art.


Learning all of the material formally and doing LeetCode problems are the meat and potatoes of my day. When I do have some extra time, I work on keeping my portfolio updated. I wrote much of my code before November 2021, when I got hired for a full-time role. So now I just have to run my code and fix all of the deprecated modules, syntax, and libraries. It’s quite annoying, but not quite as annoying as:

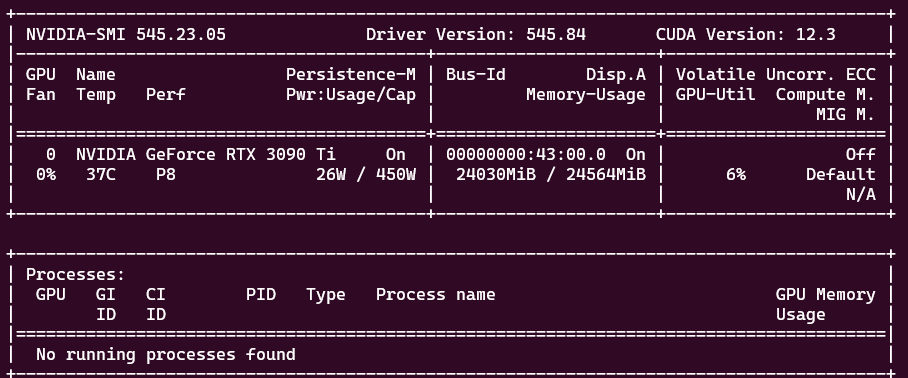

TensorFlow no longer supports GPU hardware acceleration on native Windows!!

Considering I built my computer with a high-end graphics card specifically to do Deep Learning, this was quite a disappointment. However, I did find a work-around. If you have a CUDA-enabled graphics card, which I am lucky enough to have, you can still use TensorFlow with GPU support through WSL2 (Windows Subsystem for Linux). I installed Ubuntu through it, and finally (after many, many hours of troubleshooting) I was able to get it to detect my GPU and let me use it for TensorFlow’s back-end.

- Detecting GPU in terminal:

- Detecting Back-End:
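For anyone who wants to run the same kind of check, here is a minimal sketch. It assumes TensorFlow 2.x is installed inside the WSL2 Ubuntu environment; `detect_gpus` is a helper name made up for this example, and it simply returns an empty list when TensorFlow isn't importable.

```python
def detect_gpus():
    """Return the names of the GPUs TensorFlow can see.

    Returns an empty list if TensorFlow is not installed
    or if no GPU is visible to it.
    """
    try:
        import tensorflow as tf
    except ImportError:
        # TensorFlow is not available in this environment
        return []
    # list_physical_devices("GPU") returns one entry per visible GPU
    return [gpu.name for gpu in tf.config.list_physical_devices("GPU")]


if __name__ == "__main__":
    gpus = detect_gpus()
    if gpus:
        print("TensorFlow can see:", gpus)
    else:
        print("No GPU visible to TensorFlow (or TensorFlow is not installed).")
```

An empty result inside WSL2 usually means the driver or CUDA setup still needs attention, which is where most of my troubleshooting hours went.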

When running some DL models, I noticed the speed was incredibly slow despite using my GPU. Using NZXT CAM, I saw that my GPU’s load would never exceed 25%. Each epoch took ~1500ms. Thankfully, this was fixed by simply increasing the batch size. This is actually a scenario where a low GPU load is the bad sign: with small batches, the card most likely spends much of its time waiting rather than computing.

I’ll be honest, I didn’t experiment with many batch sizes. I just chose an outrageously high one (going from 32 to 3200) to see if it raised GPU load, and it did. But 3200 is something I will likely change in the next incarnation. I’m going to read up on it: Batch Size Choice and Batch Size Performance.
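To put rough numbers on that jump, here is a small sketch of how batch size changes the number of gradient updates per epoch. The sample count is an assumption (about 13 years of daily prices at roughly 252 trading days a year), not the actual size of my dataset, and `steps_per_epoch` is a helper name invented for this example.

```python
import math


def steps_per_epoch(num_samples, batch_size):
    """Number of batches (gradient updates) needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


# Assumed training-set size: ~13 years x ~252 trading days (illustrative only)
num_samples = 13 * 252

for batch_size in (32, 3200):
    steps = steps_per_epoch(num_samples, batch_size)
    print(f"batch_size={batch_size}: {steps} steps per epoch")
```

Fewer, larger batches give the GPU more work per step, which fits the higher load I saw. The trade-off is far fewer weight updates per epoch, which is one reason 3200 probably isn't where this should stay.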

So today I took a dataset of Google stock prices and split it into Train (2010-2022) and Test (2023). There’s a lot of talk about how best to split training and testing sets. When I first learned Machine Learning I was doing research at Columbia University Medical Center, working with tiny datasets. We also had no GPUs, so it would take 27-30 hours to run through 100 epochs. The latter can be fixed with a dedicated GPU, but the former… I think it just left a strong impression on me, so I definitely have 20x more in the training set. So that’s what I used.
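The split itself is just a cut by calendar year. Here is a minimal sketch of the idea in plain Python; the rows and prices are placeholder values rather than real Google data, and `split_by_year` is a hypothetical helper, not the code from the notebook.

```python
from datetime import date


def split_by_year(rows, test_year):
    """Split (date, price) rows chronologically.

    Rows dated before test_year go to the training set,
    rows dated within test_year go to the test set.
    """
    train = [row for row in rows if row[0].year < test_year]
    test = [row for row in rows if row[0].year == test_year]
    return train, test


# Placeholder rows: (trading date, closing price). Prices are dummy values.
rows = [
    (date(2010, 1, 4), 1.0),
    (date(2016, 6, 15), 2.0),
    (date(2022, 12, 30), 3.0),
    (date(2023, 1, 3), 4.0),
]

train, test = split_by_year(rows, test_year=2023)
print(len(train), "training rows,", len(test), "test rows")
```

Cutting by date rather than shuffling keeps the test year strictly after everything the model trained on, so no future prices leak into training.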

It’s kind of annoying that GitHub doesn’t properly display .ipynb notebooks. None of the graphs or visuals make it. Fortunately, there’s nbviewer. So here it is, the first code mini-project I’ve had in 2 years.

Stock Prediction Deep Learning Model

Intra-Day Trading

So I’ve gotten back into this as of late, using much less capital. I’m trading GBPJPY under my friend’s tutelage. The prices have been around their all-time high, so shorting is the way to go.

That’s it for now. I’ll be updating this blog more as I grow along this career path and am able to give potential employers a motivation to hire me. Oh, and I’ll be updating and writing new coding projects; the next one will be on NLP and Sentiment Analysis. I’ll also be updating my portfolio and online CV. Oh yeah, and I have a QT baby.

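    import theano.tensor as T

    # Parameters to train (assumed to be Theano shared variables defined elsewhere)
    params = [weights_hidden, weights_output, bias_hidden, bias_output]

    def sgd(cost, params, lr=0.05):
        # Symbolic gradient of the cost with respect to each parameter
        grads = T.grad(cost=cost, wrt=params)
        updates = []
        for p, g in zip(params, grads):
            # Step each parameter against its gradient, scaled by the learning rate
            updates.append([p, p - g * lr])
        return updates

    # cost is assumed to be a symbolic scalar defined elsewhere
    updates = sgd(cost, params)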